Showing results for showall:

Results 1 - 23 of 23

Results filtered by data type.

-

faaa from czzzzz, last updated 6 years ago. Data type: Other

faaa

-

eeg_analyse from Hangzhou Dianzi University, last updated 7 years ago. Data type: Other

just a try

-

test from test, last updated 7 years ago. Data type: Other

test

-

Blur Image Sharpening from Kamitani Lab, Kyoto University, last updated 8 years ago. Data type: Other

Original paper: Abdelhack & Kamitani (2017) Top-down sharpening of hierarchical visual feature representations. bioRxiv. https://doi.org/10.1101/230078

-

Neurotycho: Monkey Anesthesia Task from Fujii Lab, RIKEN, last updated 8 years ago. Data type: ECoG

Overview

These are ECoG data from Yanagawa, T., Chao, Z. C., Hasegawa, N., & Fujii, N. (2013). "Large-Scale Information Flow in Conscious and Unconscious States: an ECoG Study in Monkeys." PLoS ONE, 8(11), e80845.

A monkey sitting still with its head and arms restrained was anesthetized until it lost consciousness.

Task

One monkey sat calmly with its head and arms restrained. ECoG data were recorded first in the alert condition and then in the anesthetized condition.

Data

128-channel ECoG data were sampled at 1 kHz.

Data are originally from http://neurotycho.org/anesthesia-task.

-

Speech Imagery Dataset from CREST, last updated 8 years ago. Data type: EEG, Other

Overview

EEG data recorded during vowel speech imagery from 3 subjects. Sample dataset from:

Charles S. DaSalla, Hiroyuki Kambara, Makoto Sato, Yasuharu Koike, Single-trial classification of vowel speech imagery using common spatial patterns, Neural Networks, Volume 22, Issue 9, Brain-Machine Interface, November 2009, Pages 1334-1339, ISSN 0893-6080, DOI: 10.1016/j.neunet.2009.05.008.

Task

Three tasks: speech imagery of /a/ (ai), speech imagery of /u/ (ui), and rest (re).

Data

-

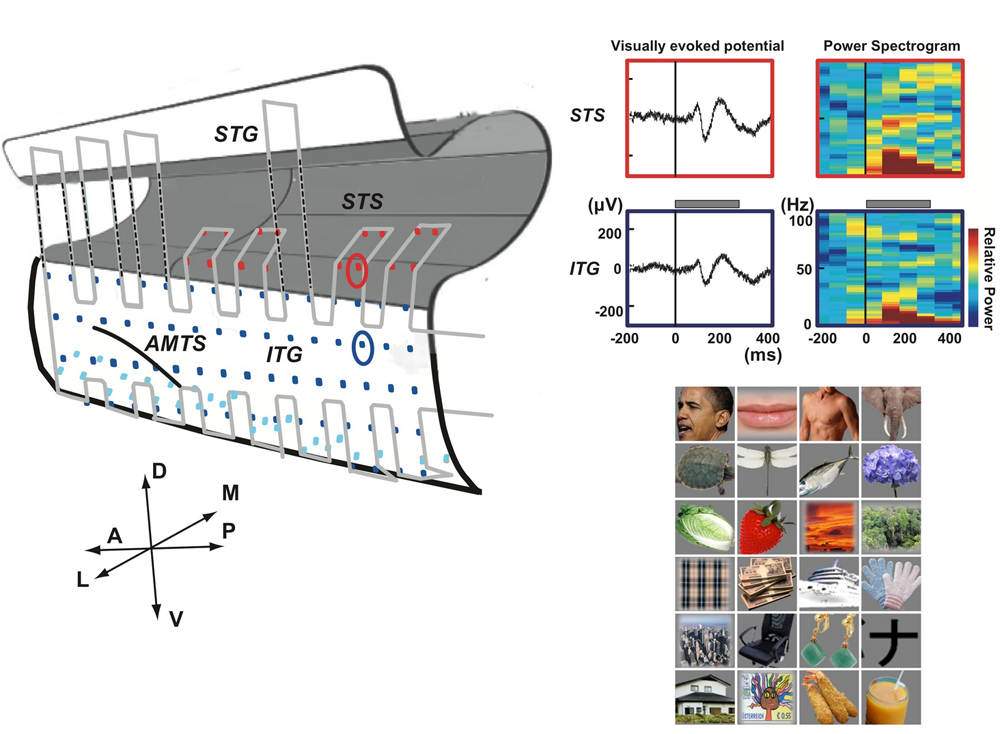

Monkey ECoG Visual Objects from Niigata University, last updated 8 years ago. Data type: ECoG, Other

Overview

Task

A macaque monkey was trained in a visual fixation task to keep its gaze within ±1° of the fixation target. Twenty-four photographs of objects from a wide variety of categories, including faces, foods, houses, and cars, were presented as stimuli. Each stimulus was presented for 300 ms, followed by a 600 ms blank interval.

Data

128-channel ECoG data from the temporal lobe, recorded at 1000 Hz, are included.
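The trial timing above maps directly onto sample counts at 1000 Hz: 300 ms of stimulus plus 600 ms of blank gives a 900-sample window per trial. The following is a minimal sketch that assumes the recording is already loaded as a NumPy array of shape (channels, samples) and that stimulus-onset sample indices are known. The function names and the array layout are hypothetical and do not describe the dataset's actual file format.

```python
import numpy as np

FS_HZ = 1000     # sampling rate stated for this dataset
STIM_MS = 300    # stimulus duration
BLANK_MS = 600   # blank interval after each stimulus


def ms_to_samples(ms: float, fs_hz: float = FS_HZ) -> int:
    """Convert a duration in milliseconds to a whole number of samples."""
    return int(round(ms * fs_hz / 1000.0))


def epoch_trials(ecog: np.ndarray, onsets) -> np.ndarray:
    """Cut one stimulus-plus-blank window starting at each onset.

    ecog   : hypothetical array of shape (n_channels, n_samples)
    onsets : hypothetical stimulus-onset sample indices
    returns: array of shape (n_trials, n_channels, window)
    """
    window = ms_to_samples(STIM_MS) + ms_to_samples(BLANK_MS)  # 300 + 600 = 900 samples
    onsets = np.asarray(onsets, dtype=int)
    # Keep only windows that lie fully inside the recording.
    valid = onsets[(onsets >= 0) & (onsets + window <= ecog.shape[1])]
    if valid.size == 0:
        return np.empty((0, ecog.shape[0], window), dtype=ecog.dtype)
    return np.stack([ecog[:, t:t + window] for t in valid])


# Usage with synthetic data: 128 channels, 10 s of signal, one onset every 900 samples.
ecog = np.random.default_rng(0).standard_normal((128, 10_000))
trials = epoch_trials(ecog, np.arange(0, 10_000, 900))
```

The last onset (sample 9900) is dropped because its 900-sample window would run past the end of the synthetic recording.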

-

Generic Object Decoding from Kamitani Lab, ATR, last updated 8 years ago. Data type: Other

-

Position Decoding from Kamitani Lab, ATR, last updated 9 years ago. Data type: Other

Overview

These are fMRI data from Majima, K. et al. (2017), "Position Information Encoded by Population Activity in Hierarchical Visual Areas."

Demo code: GitHub/KamitaniLab/PositionDecoding

-

Visual Image Reconstruction from Kamitani Lab, ATR, last updated 9 years ago. Data type: Other

Overview

These are fMRI data from Miyawaki Y et al. (2008): Visual image reconstruction from human brain activity using a combination of multiscale local image decoders. Neuron. Dec 10;60(5):915-29. In this study, a custom algorithm, sparse multinomial logistic regression, was used to train decoders at multiple spatial scales. These decoders were then combined through a linear model to reconstruct the presented stimuli.

Task

In the experiment, a human subject viewed contrast-defined images composed of 10 × 10 flickering patches. There were two types of image-viewing tasks: (1) geometric-shape and alphabet viewing and (2) random-image viewing. Images were presented in a block design, with a rest period after each image. For 10 × 10 patch images that defined common geometric shapes or alphabet letters, each image was presented for 12 s, followed by 12 s of rest. For random 10 × 10 patch images, each image was presented for 6 s, followed by 6 s of rest.
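The block design described above can be written as a per-second stimulus/rest label vector. The following is a minimal sketch under stated assumptions: the number of blocks per run and the repetition time `tr_s` are hypothetical, because neither is given in this description. The helper names are also illustrative only.

```python
import numpy as np


def block_timeline(n_blocks: int, on_s: int, off_s: int) -> np.ndarray:
    """Per-second labels for a simple block design: 1 = image shown, 0 = rest."""
    one_block = np.concatenate([np.ones(on_s, dtype=int), np.zeros(off_s, dtype=int)])
    return np.tile(one_block, n_blocks)


def to_volumes(timeline: np.ndarray, tr_s: int) -> np.ndarray:
    """Label each fMRI volume by the condition at its acquisition start.

    tr_s is a hypothetical integer repetition time in seconds; the actual
    TR is not stated in this description.
    """
    return timeline[::tr_s]


# Geometric/alphabet blocks: 12 s image + 12 s rest; random-image blocks: 6 s + 6 s.
# The number of blocks per run (3 here) is illustrative only.
geometric = block_timeline(n_blocks=3, on_s=12, off_s=12)
random_img = block_timeline(n_blocks=3, on_s=6, off_s=6)
geometric_vols = to_volumes(geometric, tr_s=2)
```

This sketch ignores hemodynamic lag. Analyses of block-design fMRI often shift such labels by a few seconds before pairing them with voxel data.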

Data

Voxels from V1, V2, V3, V4, and VP are included in this dataset. All data are preprocessed and ready to use for machine learning.

-

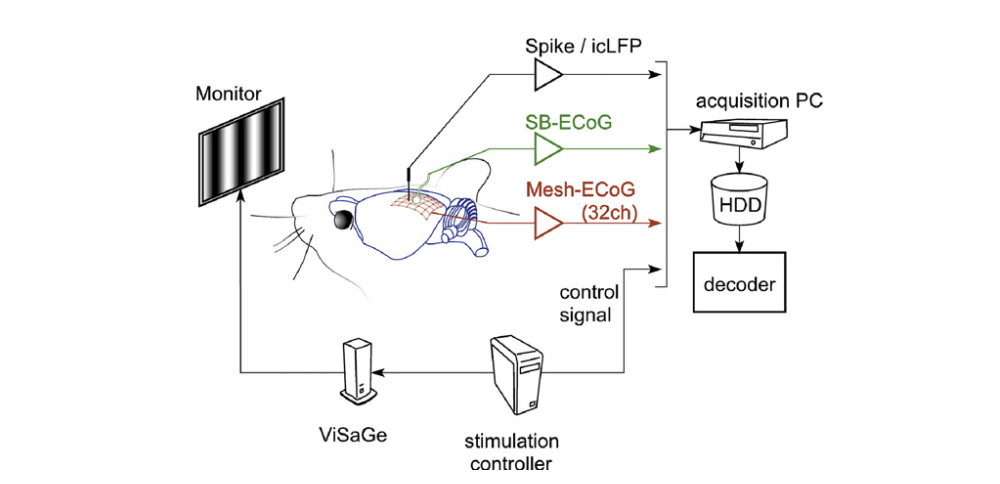

Rat Eye Stimulation from Niigata University, last updated 9 years ago. Data type: ECoG, Other

Overview

Task

Three rats had their eyes stimulated by a visual grating under four conditions: (1) left eye only, (2) right eye only, (3) both eyes, and (4) neither eye.

Data

32-channel ECoG data from the 3 rats, recorded at 1000 Hz, are included.

-

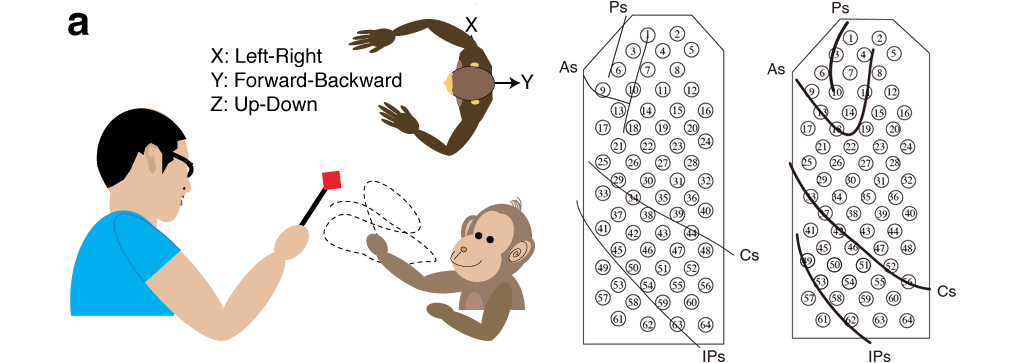

Neurotycho: Food Tracking Task (Subdural) from Fujii Lab, RIKEN, last updated 9 years ago. Data type: ECoG, Other

Overview

These are ECoG data from Shimoda K, Nagasaka Y, Chao ZC, Fujii N (2012): "Decoding continuous three-dimensional hand trajectories from epidural electrocorticographic signals in Japanese macaques." J. Neural Eng. 9:036015.

Task

The monkeys tracked food rewards with the hand contralateral to the implant side. Each monkey was trained to reach for food offered by the experimenter at irregular intervals.

Data

ECoG and motion data were sampled at 1 kHz and 120 Hz, respectively, with synchronized timestamps. Monkeys B and C each had 64-channel ECoG electrodes.
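Because the two streams share timestamps, one way to pair them is to interpolate the 120 Hz motion samples onto the 1 kHz ECoG clock. The following is a minimal sketch that assumes both timestamp vectors are loaded as arrays in seconds and that motion is stored as (samples, dimensions). These variable names and the layout are hypothetical, not the dataset's file format. The sketch uses linear interpolation via `np.interp`, which is one reasonable choice among several.

```python
import numpy as np


def motion_at_ecog_times(ecog_t: np.ndarray, motion_t: np.ndarray,
                         motion: np.ndarray) -> np.ndarray:
    """Linearly interpolate motion samples onto ECoG timestamps.

    ecog_t   : ECoG timestamps in seconds, shape (n_ecog,)
    motion_t : motion timestamps in seconds, shape (n_motion,), strictly increasing
    motion   : motion samples, shape (n_motion, n_dims), e.g. x/y/z of one marker
    returns  : shape (n_ecog, n_dims); ECoG times outside the motion range take
               the first/last motion value (np.interp's default clamping)
    """
    return np.column_stack([np.interp(ecog_t, motion_t, motion[:, d])
                            for d in range(motion.shape[1])])


# Synthetic check: 1 s of 1 kHz ECoG time and 120 Hz motion time on a shared clock.
ecog_t = np.arange(1000) / 1000.0
motion_t = np.arange(120) / 120.0
motion = np.column_stack([motion_t, 2 * motion_t, np.zeros_like(motion_t)])
aligned = motion_at_ecog_times(ecog_t, motion_t, motion)
```

For decoding, it is also common to go the other way and summarize ECoG features at each 120 Hz motion timestamp. Both approaches rely on the shared timestamps.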

Data are originally from http://neurotycho.org/epidural-ecog-food-tracking-task.

-

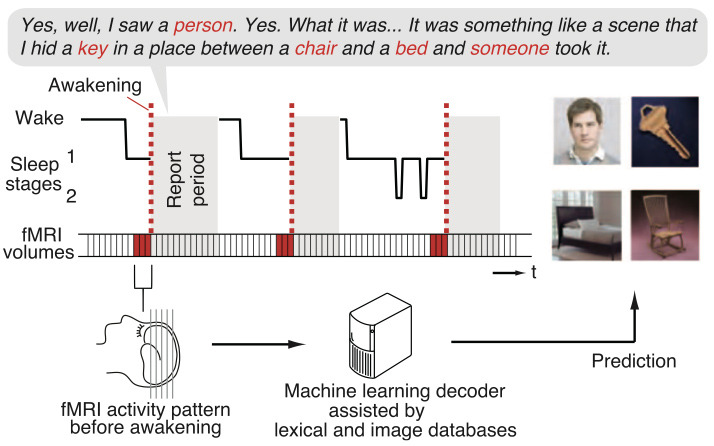

Human Dream Decoding from Kamitani Lab, ATR, last updated 9 years ago. Data type: fMRI

Overview

Task

Three human subjects slept in an fMRI scanner. When a specific EEG pattern associated with dreaming was detected, the subjects were awakened and gave verbal reports describing the contents of their dreams. The dream contents were then matched to synsets in WordNet, and the brain activity was labeled with those synsets. Finally, the data were divided into training and test sets, and a decoding analysis was performed to predict the dream contents (synsets) from the associated brain activity.

Data

Voxels from the whole brain are included in this dataset. The dataset also provides region-of-interest (ROI) masks for the following areas: V1, V2, V3, the lateral occipital complex (LOC), fusiform face area (FFA), parahippocampal place area (PPA), lower visual cortex (LVC), and higher visual cortex (HVC). All data are preprocessed and ready to use for machine learning.

-

Decoding Subjective Contents from the Brain from Kamitani Lab, ATR, last updated 9 years ago. Data type: fMRI, Other

Overview

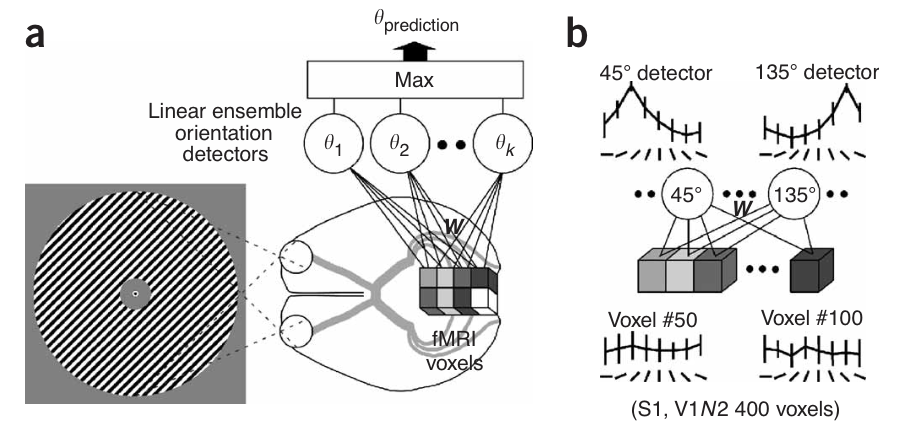

The figure above shows (a) the orientation decoder, which takes fMRI voxel data as input and outputs a predicted orientation for the stimulus, and (b) the orientation selectivity of individual voxels and detectors.

Task

Four human subjects viewed contrast-based oriented gratings, each shown at one of 8 possible orientations. Each subject performed 20-24 trials per grating orientation.

Data

Voxels from V1, V2, V3, V4, and VP are included in this dataset.

-

dsf from fdsfs, last updated 1 decade ago. Data type: Other

sdfs

-

Hand shape decoding (Rock, Papers, Scissors) from Kamitani Lab, ATR, last updated 1 decade ago. Data type: fMRI, Other

Overview

These are fMRI data for the BDTB sample programs.

Task

A human subject changed the shape of his right hand (rock, paper, or scissors) inside an fMRI scanner.

Data

Voxels from the whole brain are included in this dataset, with masks for the cerebellum (CB), primary motor cortex (M1), and supplementary motor area (SMA).

-

ddd from d, last updated 1 decade ago. Data type: Other

ed

-

Neurotycho: Food Tracking Task (Epidural) from Fujii Lab, RIKEN, last updated 1 decade ago. Data type: ECoG, Other

Overview

This is ECoG data from Chao ZC, Nagasaka Y, Fujii N (2010): "Long-term asynchronous decoding of arm motion using electrocorticographic signals in monkeys." Frontiers in Neuroengineering 3:3.

Task

The monkeys tracked food rewards with the hand contralateral to the implant side. Each monkey was trained to reach for food offered by the experimenter at irregular intervals.

Data

ECoG and motion data were sampled at 1 kHz and 120 Hz, respectively, with synchronized timestamps. Monkey A had 32-channel ECoG electrodes implanted, whereas Monkey K had 64-channel ECoG electrodes.

Data are originally from http://neurotycho.org/food-tracking-task.

-

Neurotycho: Anesthesia and Sleep Task from Fujii Lab, RIKEN, last updated 1 decade ago. Data type: ECoG, Other

Overview

These are ECoG data from Yanagawa, T., Chao, Z. C., Hasegawa, N., & Fujii, N. (2013). "Large-Scale Information Flow in Conscious and Unconscious States: an ECoG Study in Monkeys." PLoS ONE, 8(11), e80845.

Task

One monkey sat calmly with its head and arms restrained. ECoG data were recorded in awake (eyes open and eyes closed), anesthetized, and sleeping conditions.

Data

128-channel ECoG data were sampled at 1 kHz.

Data are originally from http://neurotycho.org/anesthesia-and-sleep-task.

-

Neurotycho: Emotional Movie Task from Fujii Lab, RIKEN, last updated 1 decade ago. Data type: ECoG, Other

Overview

Task

The monkey was presented with six different movie clips while sitting with its head fixed. No food reward was given. While the monkey performed the task, ECoG and eye-tracking data were recorded simultaneously. There was no motion tracking.

ECoG and eye-tracking data were sampled at 1 kHz and 120 Hz, respectively, with their start and stop points synchronized.

Data

The dataset includes ECoG signals (μV) recorded from electrodes 1-128, the stimulus (movie) ID, and eye-tracking data.

Data are originally from http://neurotycho.org/emotional-movie-task.

-

Neurotycho: Social Competition Task from Fujii Lab, RIKEN, last updated 1 decade ago. Data type: ECoG, Other

Overview

ECoG data were obtained from one monkey under different hierarchical conditions by pairing it with multiple other monkeys.

See http://neurotycho.org/social-competition-task

Task

Two monkeys sat at a table. ECoG and eye-tracking data were recorded from one monkey, and motion data were captured from both monkeys and the experimenter. In each trial, one food reward was placed on the table. If one monkey was dominant over the other, he could take the reward without hesitation; if he was submissive, he could not take it because of social suppression.

ECoG data were sampled at 1 kHz; motion and eye-tracking data were sampled at 120 Hz. The start and stop points of all data streams were synchronized.
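When only the start and stop points are synchronized, as stated here, one simple way to pair samples is to scale index positions linearly between the streams. The following is a minimal sketch that assumes both streams have constant sampling rates; under that assumption, any clock drift is spread evenly across the recording. The helper name and the example lengths are hypothetical.

```python
import numpy as np


def map_indices(n_src: int, n_dst: int) -> np.ndarray:
    """Map every sample index of one stream to the nearest sample index of another.

    Assumes both streams start and stop at the same instants and have constant
    sampling rates, so index position scales linearly between them.
    """
    if n_src < 2 or n_dst < 2:
        raise ValueError("each stream needs at least two samples")
    src = np.arange(n_src)
    return np.rint(src * (n_dst - 1) / (n_src - 1)).astype(int)


# Illustrative lengths for 10 s of recording: 1 kHz ECoG and 120 Hz motion/eye data.
n_ecog, n_motion = 10_000, 1_200
motion_to_ecog = map_indices(n_motion, n_ecog)  # ECoG index for each motion sample
```

`motion_to_ecog[i]` gives the ECoG sample index closest in time to motion sample `i`. That index can then be used, for example, to take an ECoG window ending at each motion sample.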

Data

The dataset includes ECoG and eye-tracking data from one monkey, and motion data from both monkeys and the experimenter.

Data are originally from http://neurotycho.org/social-competition-task.

-

Neurotycho: Monkey Sleep Task from Fujii Lab, RIKEN, last updated 1 decade ago. Data type: ECoG, Other

Overview

These are ECoG data from a monkey that sat still with its head and arms restrained and was then anesthetized until it lost consciousness.

Task

One monkey sat calmly with its head and arms restrained. ECoG data were recorded first in an alert condition; anesthesia was then injected, and the anesthetized condition began.

Data

128-channel ECoG data were sampled at 1 kHz.

Data are originally from http://neurotycho.org/sleep-task.

-

Neurotycho: Visual Grating Task from Fujii Lab, RIKEN, last updated 1 decade ago. Data type: ECoG, Other

Overview

These are ECoG data from a visual grating viewing task. In this task, a monkey viewed a screen that alternated every 2 seconds between a blank screen and a visual grating moving in one of 8 directions.

Task

A monkey viewed a screen that alternated every 2 seconds between a blank screen and a visual grating. The grating's direction of motion was selected randomly from 8 options (45, 90, 135, 180, 225, 270, 315, and 360 degrees).

The monkey sat with its head fixed, and its arm motion was also restrained. ECoG data and eye position were recorded. A monitor in front of the monkey displayed a grating pattern moving in one of eight directions; no fixation was required. The blank screen and the stimulus pattern alternated every 2 s. Stimulus events can be decoded from the analog value of the 129th channel. ECoG data were sampled at 1 kHz. One cycle of the sinusoidal pattern spanned 27 mm and moved at 108 mm/s, giving a temporal frequency of 4 Hz (108 / 27 = 4 cycles per second). The distance between the monkey and the screen was 490 mm.
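The description says stimulus events are encoded as an analog value on the 129th channel. However, it gives neither the voltage levels nor how each level maps to one of the 8 directions. The following is a minimal sketch that finds contiguous above-threshold runs on that channel and reports the median level of each run. The threshold is a hypothetical parameter, and mapping levels to directions has to come from the original Neurotycho documentation. The sketch also checks the 4 Hz temporal-frequency arithmetic.

```python
import numpy as np

CYCLE_MM = 27.0      # one cycle of the sinusoidal grating
SPEED_MM_S = 108.0   # drift speed on the screen
TEMPORAL_FREQ_HZ = SPEED_MM_S / CYCLE_MM  # 108 / 27 = 4 cycles per second


def stimulus_epochs(trigger, threshold: float):
    """Find contiguous runs where the trigger channel exceeds a threshold.

    trigger   : 1-D array from the stimulus channel (the 129th channel,
                i.e. index 128 if all channels sit in one 0-based array)
    threshold : hypothetical level separating blank from stimulus periods
    returns   : list of (onset, offset, median_level); offset is exclusive
    """
    trig = np.asarray(trigger, dtype=float)
    # Pad with False so every run has both a rising and a falling edge.
    above = np.concatenate([[False], trig > threshold, [False]])
    edges = np.flatnonzero(above[1:] != above[:-1])  # alternating rising/falling edges
    onsets, offsets = edges[0::2], edges[1::2]
    return [(int(a), int(b), float(np.median(trig[a:b])))
            for a, b in zip(onsets, offsets)]


# Synthetic 1 kHz trigger: blank, 2 s stimulus at level 3, blank, 2 s stimulus at level 5.
trig = np.zeros(10_000)
trig[2000:4000] = 3.0
trig[6000:8000] = 5.0
events = stimulus_epochs(trig, threshold=1.0)
```

At 1 kHz, each 2 s stimulus period should appear as a run of about 2000 samples, which offers a quick sanity check on the chosen threshold.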

Data

128 ECoG channels were recorded at 1000 Hz; the electrode positions are shown in K2.png.

Data are originally from http://neurotycho.org/visual-grating-task.